# null

Source: https://evalprotocol.io/community

Eval Protocol is an open standard for AI evaluation that helps developers build

better AI products through robust testing and iteration.

Most AI evaluation frameworks are proprietary or organization-specific, leading to:

* Duplicated evaluation code across teams

* Inconsistent benchmarking standards

* Limited access to proven evaluation methodologies

* Slow iteration cycles without community feedback

Our protocol standardizes AI evaluation, enabling you to:

* Share and reuse evaluation logic across projects

* Benchmark against established baselines

* Iterate faster with community-driven improvements

* Build reproducible evaluation pipelines

* Access evaluation tools used by production AI systems

Join [#eval-protocol](https://discord.com/channels/1137072072808472616/1400975572405850155) on Discord to discuss implementations, share evaluation strategies, and contribute to the standard.

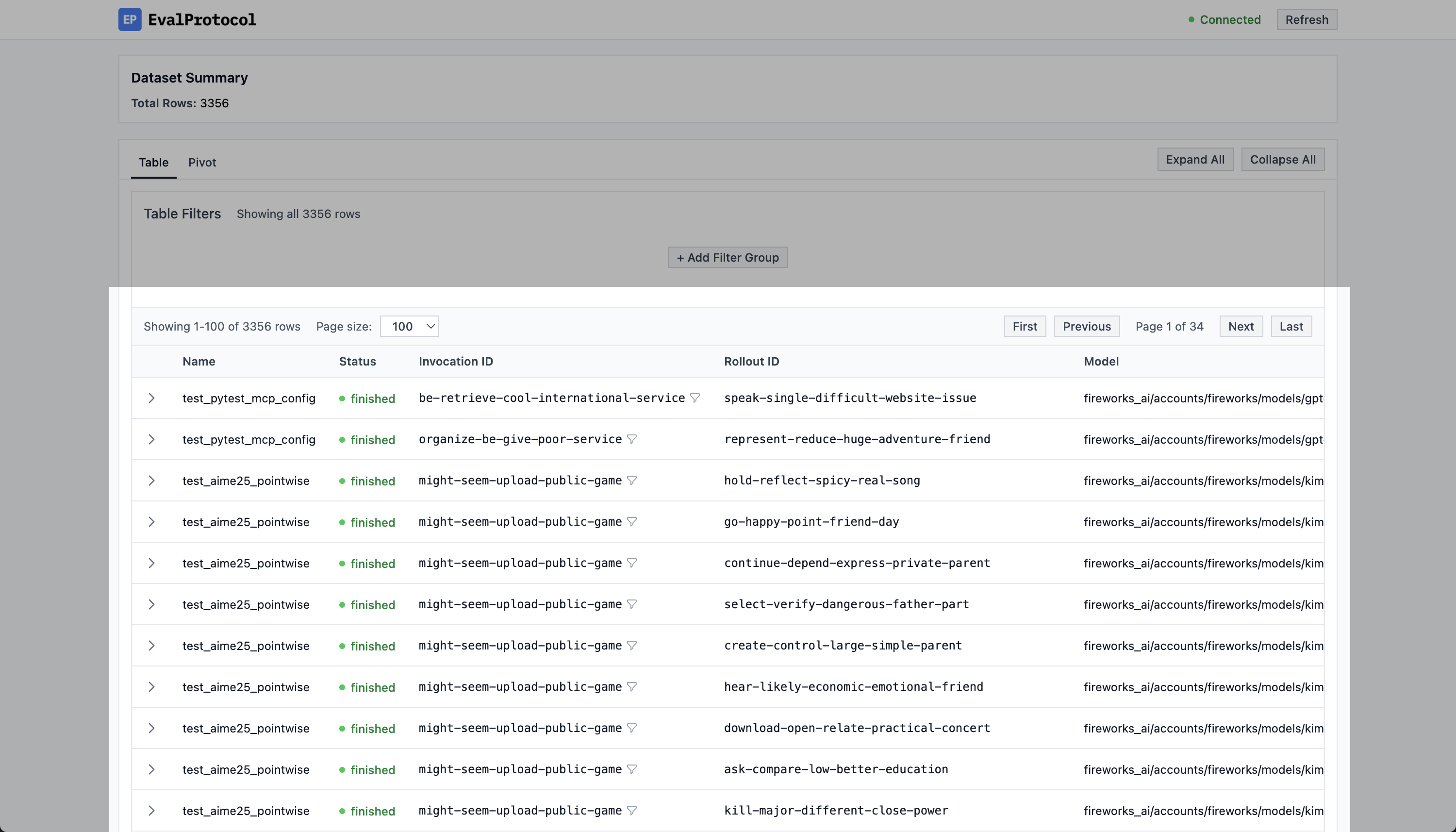

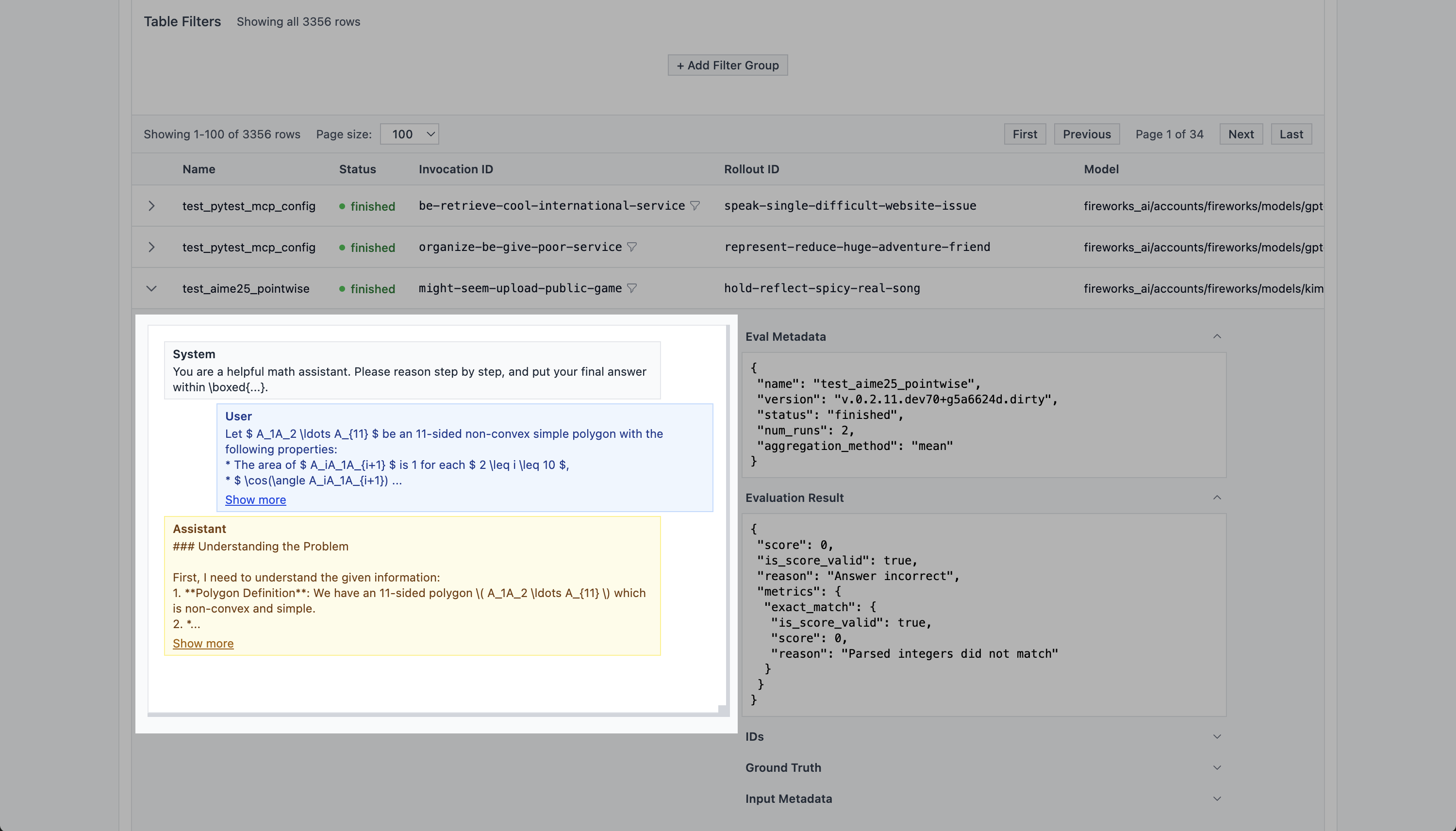

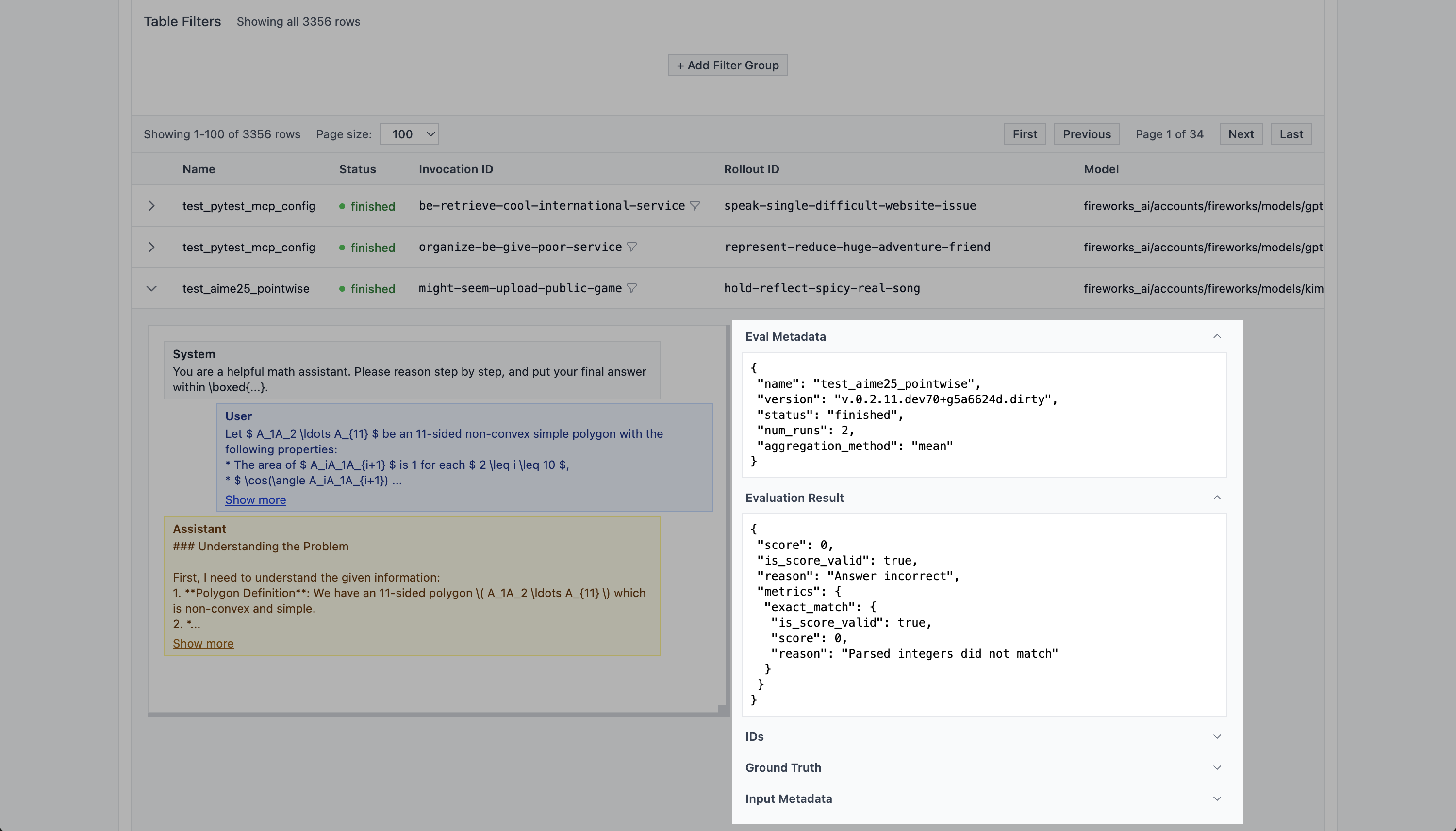

# AIME 2025 (Open-Resource)

Source: https://evalprotocol.io/example/aime2025

Quick AIME-style math check using boxed final answers

This example wires up a lightweight AIME-style evaluation using the open `AIME2025` JSONL from Hugging Face. It is intended for quick model picking rather than a full reimplementation of the benchmark.

This example is now implemented as a suite in `eval_protocol/benchmarks/suites/aime25.py` and exported as `aime25`.

## What it does

* Pulls AIME2025 JSONL directly from Hugging Face.

* Prompts the model to reason and place the final answer inside `\\boxed{...}`.

* Parses the boxed value and compares it against ground truth for exact match scoring.

## How it’s configured

Key pieces in the SDK example:

* Dataset adapter converts raw rows with `question` and `answer` into `EvaluationRow`s.

* `@evaluation_test` provides URLs, model, and rollout parameters (including optional reasoning-effort variants).

* Evaluator extracts a final integer from the assistant message and checks equality with the ground truth.

## Run it locally

After installing eval-protocol, you can run the benchmark from anywhere:

```bash

pytest --pyargs eval_protocol.benchmarks.test_aime25 -v \

--ep-print-summary --ep-summary-json artifacts/aime25.json

```

Tip: use `--ep-max-rows=50` to limit dataset size, or `--ep-max-rows=all` for the full dataset. You can also use `--ep-reasoning-effort=high` and `--ep-input-param temperature=0.0` to adjust model settings.

## Notes

* This is a convenience wrapper for model selection, not a canonical reproduction of AIME.

* The evaluation is strict exact match over a parsed integer from `\\boxed{...}`.

# APPS Coding Evaluation

Source: https://evalprotocol.io/example/apps-coding

Evaluate competitive programming abilities using APPS dataset with comprehensive test suites

This example demonstrates how to create comprehensive competitive programming evaluations using the APPS (Automated Programming Progress Standard) dataset from CodeParrot. The evaluation tests AI models' ability to solve complex algorithmic challenges similar to those found in competitive programming contests.

You can find the complete code for this example at [test\_apps\_coding.py](https://github.com/eval-protocol/python-sdk/blob/main/tests/pytest/test_apps_coding.py).

## Understanding APPS Coding Evaluation

APPS coding evaluation assesses a model's ability to:

* **Solve complex algorithmic problems**: Handle competitive programming challenges with multiple constraints

* **Implement sophisticated logic**: Design algorithms for graph theory, dynamic programming, and data structures

* **Handle multiple test cases**: Pass comprehensive test suites with edge cases and boundary conditions

* **Work with competitive formats**: Process standard input/output formats used in programming contests

Unlike basic coding tasks that test simple function implementation, APPS evaluation tests **advanced algorithmic thinking and competitive programming skills** - essential for building AI systems capable of complex problem-solving.

## Understanding the APPS Dataset Structure

The APPS dataset from CodeParrot contains 10,000 competitive programming problems sourced from platforms like Codeforces, AtCoder, Kattis, and Codewars, providing realistic algorithmic challenges at three difficulty levels.

### Dataset Format

Each entry in the APPS dataset contains:

* **`problem_id`**: Unique identifier for the problem

* **`question`**: Detailed problem description with constraints, examples, and input/output format

* **`solutions`**: Array of reference Python solutions that correctly solve the problem

* **`input_output`**: JSON containing comprehensive test cases with inputs and expected outputs

* **`difficulty`**: Classification as "introductory", "interview", or "competition"

* **`url`**: Source URL of the original problem from competitive programming platforms

* **`starter_code`**: Optional template code to begin implementation

### Example APPS Dataset Entry

**Competitive Programming Problem:**

```json

{

"id": 1,

"question": "Mikhail walks on a Cartesian plane. He starts at the point $(0, 0)$, and in one move he can go to any of eight adjacent points. For example, if Mikhail is currently at the point $(0, 0)$, he can go to any of the following points in one move: $(1, 0)$; $(1, 1)$; $(0, 1)$; $(-1, 1)$; $(-1, 0)$; $(-1, -1)$; $(0, -1)$; $(1, -1)$.\n\nIf Mikhail goes from the point $(x1, y1)$ to the point $(x2, y2)$ in one move, and $x1 \ne x2$ and $y1 \ne y2$, then such a move is called a diagonal move.\n\nMikhail has $q$ queries. For the $i$-th query Mikhail's target is to go to the point $(n_i, m_i)$ from the point $(0, 0)$ in exactly $k_i$ moves...",

"solutions": [

"q=int(input())\n\nfor e in range(q):\n x,y,k=list(map(int,input().split()))\n x,y=abs(x),abs(y)\n x,y=max(x,y),min(x,y)\n # ... complete solution"

],

"input_output": {

"inputs": [

"3

2 2 3

4 3 7

10 1 9"

],

"outputs": [

"1

6

-1"

]

},

"difficulty": "interview",

"url": "https://codeforces.com/problemset/problem/1036/B",

"starter_code": ""

}

```

### Dataset Characteristics

**Problem Complexity**: APPS problems feature advanced algorithmic concepts:

* **Graph algorithms**: Shortest paths, minimum spanning trees, graph traversal

* **Dynamic programming**: Optimization problems with overlapping subproblems

* **Data structures**: Advanced usage of heaps, trees, and custom data structures

* **Mathematical algorithms**: Number theory, combinatorics, and geometric problems

* **String algorithms**: Pattern matching, string manipulation, and parsing

**Difficulty Progression**:

* **Introductory (2,889 problems)**: Basic algorithmic concepts and simple implementations

* **Interview (3,592 problems)**: Common coding interview problems with moderate complexity

* **Competition (572 problems)**: Advanced competitive programming challenges

**Test Coverage**: Comprehensive testing ensures robust evaluation:

* **Multiple test cases**: Average of 21.2 test cases per problem

* **Edge cases**: Boundary conditions and corner cases included

* **Performance constraints**: Problems include time and memory limits

* **Real contest data**: Authentic test cases from actual programming competitions

**Sample Dataset**: The EP python-sdk includes a sample APPS dataset with just 3 problems for testing and demonstration purposes. The full CodeParrot APPS dataset contains 10,000 problems across all difficulty levels.

## Step 1: Import Required Dependencies

First, we import the necessary modules from the EP framework:

```python

import json

from typing import Any, Dict, List

from eval_protocol.models import EvaluateResult, EvaluationRow, Message

from eval_protocol.pytest import SingleTurnRolloutProcessor, evaluation_test

from eval_protocol.rewards.apps_coding_reward import evaluate_apps_solution

```

* `json`: For parsing the complex input/output test case data

* `typing`: Python's typing module for type hints

* `EvaluateResult`, `EvaluationRow`, `Message`: Core EP data structures

* `default_single_turn_rollout_processor`: Default processor for single-turn conversations

* `evaluation_test`: Decorator for configuring evaluation tests

* `evaluate_apps_solution`: Specialized function for evaluating APPS competitive programming solutions

## Step 2: Create the Dataset Adapter

We need to convert the APPS dataset format to the EP's expected format:

```python

def apps_dataset_to_evaluation_row(data: List[Dict[str, Any]]) -> List[EvaluationRow]:

"""

Convert entries from APPS dataset to EvaluationRow objects.

This adapter extracts the problem statement and stores the comprehensive

test cases (input/output pairs) as ground truth for evaluation.

Args:

data: List of APPS dataset entries with problem descriptions and test cases

Returns:

List of EvaluationRow objects ready for evaluation

"""

return [

EvaluationRow(

messages=[Message(role="user", content=row["question"])],

ground_truth=row["input_output"]

)

for row in data

]

```

This adapter:

* Uses the complete problem description as the user message

* Stores the JSON test case data as ground truth for comprehensive evaluation

* Preserves the complex input/output format required for competitive programming

* Creates proper Message objects for the evaluation framework

**Key transformations:**

* **Problem preservation**: Maintains full problem statements with constraints and examples

* **Test case handling**: Preserves multiple test cases with complex input/output formats

* **Ground truth format**: Keeps JSON structure for sophisticated evaluation logic

## Step 3: Configure and Run the Evaluation

We use the `@evaluation_test` decorator to configure the APPS evaluation:

```python

@evaluation_test(

input_dataset=["tests/pytest/data/apps_sample_dataset.jsonl"],

dataset_adapter=apps_dataset_to_evaluation_row,

completion_params=[{"model": "accounts/fireworks/models/kimi-k2-instruct", "temperature": 0.0, "max_tokens": 4096}],

passed_threshold=0.33,

rollout_processor=SingleTurnRolloutProcessor(),

num_runs=1,

mode="pointwise",

)

def test_apps_code_evaluation(row: EvaluationRow) -> EvaluationRow:

"""

Evaluation function that tests APPS coding problems using evaluate_apps_solution.

Args:

row: EvaluationRow containing the conversation messages and ground_truth as JSON string

Returns:

EvaluationRow with the evaluation result

"""

# Use evaluate_apps_solution directly

result = evaluate_apps_solution(

messages=row.messages,

ground_truth=row.ground_truth,

)

# Set the evaluation result on the row

row.evaluation_result = result

return row

```

**Configuration parameters:**

* `input_dataset`: Path to the APPS dataset JSONL file

* `model`: The model to evaluate (uses a capable model for complex problems)

* `rollout_input_params`: Model parameters with higher token limit for complex solutions

* `threshold_of_success`: 33% success rate threshold (competitive programming is challenging)

* `mode`: `pointwise` for evaluating individual problems independently

* `dataset_adapter`: Function that converts APPS format to EvaluationRow objects

* `rollout_processor`: Uses default single-turn processor

**Evaluation process:**

1. **Problem presentation**: Present the full competitive programming problem to the model

2. **Solution generation**: Model generates a complete algorithmic solution

3. **Code extraction**: Extract Python code from the model's response

4. **Comprehensive testing**: Run solution against all test cases in the problem

5. **Pass rate calculation**: Calculate percentage of test cases passed

## Core Functions Explained

### `evaluate_apps_solution` Function

The `evaluate_apps_solution` function is a specialized evaluation function designed for competitive programming problems that handles complex test case execution and scoring.

**Key Features:**

* **Code extraction**: Identifies and extracts Python code from model responses

* **Test case parsing**: Processes JSON test case data with multiple input/output pairs

* **Secure execution**: Runs code safely with timeouts and resource limitations

* **Comprehensive scoring**: Calculates pass rates across all test cases

* **Error handling**: Provides detailed feedback on compilation and runtime errors

* **Competitive format support**: Handles standard input/output format used in contests

**Function Signature:**

```python

def evaluate_apps_solution(

messages: List[Message],

ground_truth: Optional[str],

**kwargs

) -> EvaluateResult:

```

**Parameters:**

* `messages`: List of conversation messages (problem statement from user, solution from assistant)

* `ground_truth`: JSON string containing test cases with inputs and expected outputs

* `**kwargs`: Additional parameters including execution timeout settings

**Return Value:**

* `EvaluateResult` with pass rate score (0.0 to 1.0) and detailed metrics

### Implementation Details

The `evaluate_apps_solution` function implements a comprehensive evaluation pipeline with robust security and error handling:

**1. Code Extraction Process:**

````python

# Extract Python code from model response

code_solution = _extract_python_code(raw_solution_content)

# Handles various response formats:

# - Markdown code blocks: ```python ... ```

# - Inline code snippets

# - Mixed text and code responses

# - Removes verbose explanations and comments

````

**2. Ground Truth Processing:**

```python

# Parse JSON test case data

if isinstance(ground_truth, str):

in_outs = json.loads(ground_truth) # Parse JSON string

elif isinstance(ground_truth, dict):

in_outs = ground_truth # Already parsed by JSONL loader

# Validate required structure

assert "inputs" in in_outs and "outputs" in in_outs

```

**3. Secure Test Execution:**

The evaluation uses sandboxed execution with comprehensive security measures:

```python

# Force standard input execution path and prepare secure environment

in_outs_for_check = in_outs.copy()

if "fn_name" in in_outs_for_check:

del in_outs_for_check["fn_name"] # Use stdin/stdout testing

# For each test case in the problem:

for i, (test_input, expected_output) in enumerate(zip(inputs, outputs)):

# Prepare secure execution environment

wrapped_code = f"""

import sys

sys.setrecursionlimit(6*10**5)

{standard_imports} # Common competitive programming imports

{user_generated_code}

"""

# Execute in isolated subprocess with resource limits

process = subprocess.run(

[sys.executable, "-c", wrapped_code],

input=test_input,

capture_output=True,

timeout=timeout,

text=True

)

# Compare outputs and record result

if process.returncode == 0:

actual_output = process.stdout.strip()

results.append(actual_output == expected_output.strip())

else:

results.append(False) # Runtime error

```

**Security Features:**

* **Sandboxed execution**: Code runs in isolated subprocess with resource limits

* **Standard I/O redirection**: Test inputs via stdin, outputs captured from stdout

* **Security restrictions**: File system access, network operations, and dangerous imports disabled

* **Resource monitoring**: Memory usage, CPU time, and execution duration tracked

* **Timeout enforcement**: Long-running or infinite loops automatically terminated

**4. Scoring and Error Analysis:**

```python

# Calculate pass rate from results

actual_results = results_list # List of True/False for each test case

num_tests = len(actual_results)

passed_count = sum(1 for res in actual_results if res is True)

score = float(passed_count) / num_tests

# Process execution metadata for detailed error reporting

if exec_metadata_list:

if len(exec_metadata_list) == 1 and exec_metadata_list[0].get("error"):

# Global compilation error

reason_msg += f" Execution Error: {exec_metadata_list[0]['error']}"

elif score == 0.0 and exec_metadata_list[0].get("error_message") == "Wrong Answer":

# Detailed failure analysis with specific test case details

first_fail_meta = exec_metadata_list[0]

reason_msg += (

f". First fail details: Inputs: {first_fail_meta.get('inputs', 'N/A')}, "

f"Expected: {first_fail_meta.get('expected', 'N/A')}, "

f"Got: {first_fail_meta.get('output', 'N/A')}"

)

```

**Error Handling Hierarchy:**

1. **Code extraction failure**: Score 0.0 - No valid Python code found

2. **Compilation errors**: Score 0.0 - Syntax errors prevent execution

3. **Runtime errors**: Per-test-case failure - Exceptions during execution

4. **Timeout errors**: Per-test-case failure - Exceeded time limits

5. **Wrong output**: Per-test-case failure - Incorrect results but valid execution

6. **Perfect execution**: Score 1.0 - All test cases pass with correct outputs

**Result Types:**

* **True**: Test case passed with correct output

* **False**: Test case failed (wrong output)

* **-1**: Runtime error or timeout

* **-2**: Compilation error

**Example Evaluation Flow:**

```python

# Problem: Mikhail's diagonal moves (from example above)

# Model generates solution

result = evaluate_apps_solution(

messages=[Message(role="user", content=problem_description)],

ground_truth='{"inputs": ["3\\n2 2 3\\n4 3 7\\n10 1 9\\n"], "outputs": ["1\\n6\\n-1\\n"]}'

)

# Result might be:

# EvaluateResult(

# score=1.0, # All test cases passed

# reason="Passed 1/1 test cases",

# metrics={

# "pass_rate": MetricResult(score=1.0, reason="1/1"),

# "execution_metadata": MetricResult(...)

# }

# )

```

## Evaluation Scenarios and Results

The APPS coding evaluation handles various competitive programming scenarios:

### Perfect Solution (Score: 1.0)

**Scenario**: Model correctly solves all test cases

```python

# Problem: Mikhail's diagonal moves

# Model provides optimal solution using coordinate geometry

q = int(input())

for _ in range(q):

x, y, k = list(map(int, input().split()))

x, y = abs(x), abs(y)

# ... correct algorithm implementation

# Handles all coordinate movement constraints

# Result: ✅ Passed 3/3 test cases (100% success rate)

```

### Partial Solution (Score: 0.67)

**Scenario**: Model solves most test cases but fails on edge cases

```python

# Problem: Mikhail's diagonal moves

# Model has correct main logic but misses boundary condition

q = int(input())

for _ in range(q):

x, y, k = list(map(int, input().split()))

# ... mostly correct implementation

# Fails on impossible movement case

# Result: ⚠️ Passed 2/3 test cases (67% success rate)

```

### Algorithmic Error (Score: 0.0)

**Scenario**: Model uses incorrect algorithm approach

```python

# Problem: Mikhail's diagonal moves

# Model uses incorrect movement calculation

q = int(input())

for _ in range(q):

x, y, k = list(map(int, input().split()))

# Incorrect approach - doesn't consider diagonal optimization

print(k) # Always outputs k regardless of constraints

# Result: ❌ Passed 0/3 test cases - Wrong algorithmic approach

```

### Timeout Error (Score: 0.0)

**Scenario**: Model solution exceeds time limits

```python

# Problem: Mikhail's diagonal moves

# Model uses inefficient brute force instead of mathematical approach

q = int(input())

for _ in range(q):

x, y, k = list(map(int, input().split()))

# Simulates all possible paths - exponential complexity

# Times out on larger coordinate values

# Result: ❌ Execution timeout - Algorithm too slow for constraints

```

### Compilation Error (Score: 0.0)

**Scenario**: Model generates syntactically incorrect code

```python

# Problem: Mikhail's diagonal moves

# Model has syntax errors

q = int(input())

for _ in range(q) # Missing colon

x, y, k = list(map(int, input().split()))

# ... rest of solution

# Result: ❌ Compilation error: SyntaxError - Invalid Python syntax

```

## Conclusion

This APPS coding evaluation demonstrates how to assess AI models' competitive programming capabilities using comprehensive algorithmic challenges. The evaluation ensures models can understand complex problem statements, design efficient algorithms, and implement solutions that pass rigorous test suites.

This evaluation is particularly valuable for:

* **Algorithmic reasoning assessment**: Testing advanced problem-solving capabilities

* **Competitive programming preparation**: Validating solutions against contest-quality problems

* **Algorithm implementation**: Ensuring correct and efficient code generation

The APPS evaluation focuses on **algorithmic correctness and efficiency** rather than simple function implementation, making it essential for building AI systems capable of sophisticated problem-solving. It provides comprehensive testing with real competitive programming challenges and detailed performance metrics.

# Basic Coding Evaluation

Source: https://evalprotocol.io/example/basic-coding

Evaluate code correctness by executing Python functions and comparing outputs

This example demonstrates how to create comprehensive basic coding evaluations using the Eval Protocol (EP) framework. The evaluation uses code execution functions to test whether models can write correct Python functions that produce expected outputs when executed with specific inputs.

You can find the complete code for this example at [test\_basic\_coding.py](https://github.com/eval-protocol/python-sdk/blob/main/tests/pytest/test_basic_coding.py).

## Understanding Basic Coding Evaluation

Basic coding evaluation assesses a model's ability to:

* **Write syntactically correct code**: Generate valid Python syntax without errors

* **Implement correct logic**: Create functions that perform the specified operations

* **Handle different inputs**: Process various input values correctly (positive, negative, zero, edge cases)

* **Produce exact outputs**: Return results that match expected values precisely

Unlike text-based evaluations that focus on natural language generation, coding evaluations test a model's **programming capabilities and logical reasoning** - essential skills for AI systems that need to write functional code.

## Understanding the Dataset Structure

The basic coding dataset contains simple programming tasks that evaluate fundamental coding skills, from arithmetic operations to data structure manipulation.

### Dataset Format

Each entry in the dataset contains:

* **`prompt`**: The coding task description specifying what function to write

* **`input`**: Test input value to pass to the function

* **`expected_output`**: The correct output the function should return

### Example Dataset Entries

**Simple Addition Function:**

```json

{

"prompt": "Write a Python function `add_one` that takes an integer and returns the integer incremented by 1.",

"input": "5",

"expected_output": "6"

}

```

**Multiplication Function:**

```json

{

"prompt": "Write a Python function `multiply_by_two` that takes an integer and returns the integer multiplied by 2.",

"input": "3",

"expected_output": "6"

}

```

**List Operations:**

```json

{

"prompt": "Write a Python function `get_length` that takes a list and returns its length.",

"input": "[1, 2, 3]",

"expected_output": "3"

}

```

## Step 1: Import Required Dependencies

First, we import the necessary modules from the EP framework:

```python

from typing import Any, Dict, List

from eval_protocol.models import EvaluateResult, EvaluationRow, Message

from eval_protocol.pytest import SingleTurnRolloutProcessor, evaluation_test

from eval_protocol.rewards.code_execution import extract_code_blocks, execute_python_code

```

* `typing`: Python's typing module for type hints (Any, Dict, List)

* `EvaluateResult`: Result object containing evaluation score and reasoning

* `EvaluationRow`: Data structure containing conversation messages and ground truth

* `Message`: Individual message in the conversation

* `default_single_turn_rollout_processor`: Default processor for single-turn conversations

* `evaluation_test`: Decorator for configuring evaluation tests

* `extract_code_blocks`: Function to extract Python code from markdown code blocks

* `execute_python_code`: Function to safely execute Python code and capture output

## Step 2: Create the Dataset Adapter

We need to convert the basic coding dataset format to the EP's expected format:

```python

def coding_dataset_to_evaluation_row(data: List[Dict[str, Any]]) -> List[EvaluationRow]:

"""

Convert entries from coding dataset to EvaluationRow objects.

This adapter combines the coding prompt with the test input to create

a complete user message, and stores the expected output as ground truth

for comparison during evaluation.

Args:

data: List of coding dataset entries with prompt, input, and expected_output

Returns:

List of EvaluationRow objects ready for evaluation

"""

return [

EvaluationRow(

messages=[Message(role="user", content=f"{row['prompt']} Input: {row['input']}")],

ground_truth=row["expected_output"]

)

for row in data

]

```

This adapter:

* Combines the coding prompt with the test input into a single user message

* Stores the expected output as ground truth for comparison

* Creates Message objects with the proper role and content structure

* Returns a list of EvaluationRow objects that the framework can process

**Key transformations:**

* **Message construction**: Combines prompt and input into clear instructions

* **Ground truth preservation**: Maintains expected output for exact comparison

* **Role assignment**: Sets proper user role for the coding request

## Step 3: Configure and Run the Evaluation

We use the `@evaluation_test` decorator to configure the evaluation:

```python

@evaluation_test(

input_dataset=["tests/pytest/data/basic_coding_dataset.jsonl"],

dataset_adapter=coding_dataset_to_evaluation_row,

completion_params=[{"model": "accounts/fireworks/models/kimi-k2-instruct", "temperature": 0.0, "max_tokens": 4096}],

passed_threshold=0.8,

rollout_processor=SingleTurnRolloutProcessor(),

num_runs=1,

mode="pointwise",

)

async def test_coding_code_evaluation(row: EvaluationRow) -> EvaluationRow:

"""

Evaluation function that tests code correctness by executing it locally.

This function:

1. Extracts Python code from the assistant's response

2. Executes the code locally with timeout=10

3. Compares the output to ground_truth

4. Returns a score of 1.0 if output matches, 0.0 otherwise

Args:

row: EvaluationRow containing the conversation messages and expected_output in ground_truth

Returns:

EvaluationRow with the evaluation result

"""

# Check if we have an assistant response

if len(row.messages) < 2 or row.messages[-1].role != "assistant":

row.evaluation_result = EvaluateResult(score=0.0, reason="No assistant response found")

return row

assistant_content = row.messages[-1].content or ""

expected_output = (row.ground_truth or "").strip()

# Extract Python code blocks

code_blocks = extract_code_blocks(assistant_content, language="python")

if not code_blocks:

row.evaluation_result = EvaluateResult(score=0.0, reason="No Python code block found")

return row

code = code_blocks[0]["code"]

# Execute the code locally

execution_result = execute_python_code(code, timeout=10)

if not execution_result.get("success", False):

error_msg = execution_result.get("error", "Code execution failed")

row.evaluation_result = EvaluateResult(score=0.0, reason=f"Execution error: {error_msg}")

return row

# Compare output with expected

actual_output = (execution_result.get("output", "") or "").strip()

if actual_output == expected_output:

row.evaluation_result = EvaluateResult(

score=1.0,

reason=f"✅ Output matches: '{actual_output}'"

)

else:

row.evaluation_result = EvaluateResult(

score=0.0,

reason=f"❌ Expected: '{expected_output}', Got: '{actual_output}'"

)

return row

```

**Configuration parameters:**

* `input_dataset`: Path to the basic coding dataset JSONL file

* `model`: The model to evaluate (Fireworks Kimi model in this case)

* `rollout_input_params`: Model parameters including temperature=0.0 for deterministic results

* `threshold_of_success`: 80% success rate threshold for the evaluation

* `mode`: `pointwise` for evaluating individual rows independently

* `dataset_adapter`: Function that converts coding format to EvaluationRow objects

* `rollout_processor`: Uses default single-turn processor for coding evaluations

**Evaluation process:**

1. **Validate response**: Ensure we have a valid assistant response containing code

2. **Extract code**: Use `extract_code_blocks` to find Python code in markdown blocks

3. **Execute safely**: Run the code in a secure environment with timeout protection

4. **Compare output**: Perform exact string comparison between actual and expected results

5. **Return score**: Provide binary score (1.0 for exact match, 0.0 for any difference)

## Core Functions Explained

### `extract_code_blocks` Function

The `extract_code_blocks` function identifies and extracts Python code from the model's response, typically from markdown code blocks.

**Key Features:**

* **Markdown parsing**: Identifies \`\`\`python code blocks in responses

* **Language filtering**: Can filter for specific programming languages

* **Content cleaning**: Removes verbose explanatory text that might interfere with execution

* **Multiple blocks**: Can extract multiple code blocks if present

**Function Signature:**

```python

def extract_code_blocks(text: str, language: Optional[str] = None) -> List[Dict[str, str]]:

```

**Parameters:**

* `text`: The assistant's response containing code

* `language`: Optional language filter (e.g., "python")

**Return Value:**

* List of dictionaries with "code" and "language" keys

**Example Usage:**

```python

response = """

Here's the solution:

\`\`\`python

def add_one(x):

return x + 1

\`\`\`

This function takes an integer and returns it incremented by 1.

"""

code_blocks = extract_code_blocks(response, language="python")

print(code_blocks[0]["code"]) # "def add_one(x):\n return x + 1"

```

### `execute_python_code` Function

The `execute_python_code` function safely executes Python code in a controlled environment with security restrictions and resource limits.

**Key Features:**

* **Secure execution**: Runs code in a subprocess with memory and time limits

* **Safety guards**: Disables dangerous operations like file system access

* **Timeout protection**: Prevents infinite loops and long-running code

* **Error handling**: Captures and reports execution errors clearly

* **Output capture**: Returns both stdout and stderr from execution

**Function Signature:**

```python

def execute_python_code(code: str, timeout: int = 5) -> Dict[str, Any]:

```

**Parameters:**

* `code`: Python code to execute

* `timeout`: Maximum execution time in seconds

**Return Value:**

* Dictionary with execution results including success status, output, and errors

**Example Usage:**

```python

code = """

def add_one(x):

return x + 1

result = add_one(5)

print(result)

"""

result = execute_python_code(code, timeout=10)

if result["success"]:

print(f"Output: {result['output']}") # "Output: 6"

else:

print(f"Error: {result['error']}")

```

### Security and Safety Features

The code execution environment includes several safety measures:

**Resource Limits:**

* **Memory limits**: Restricts memory usage to prevent excessive consumption

* **CPU limits**: Prevents long-running computations

* **Timeout enforcement**: Kills processes that exceed time limits

**Disabled Operations:**

* **File system access**: Prevents reading/writing files

* **Network operations**: Blocks network requests

* **System calls**: Disables potentially dangerous system operations

* **Process spawning**: Prevents creating new processes

**Error Handling:**

* **Exception capture**: Catches and reports Python exceptions

* **Timeout detection**: Identifies and reports timeout errors

* **Resource exhaustion**: Handles memory and CPU limit violations

## Evaluation Scenarios and Results

The basic coding evaluation handles various scenarios with different outcomes:

### Perfect Implementation (Score: 1.0)

**Scenario**: Model writes correct function that produces expected output

```python

# User prompt: "Write a Python function `add_one` that takes an integer and returns the integer incremented by 1. Input: 5"

# Model response:

def add_one(x):

return x + 1

result = add_one(5)

print(result)

```

**Result**: ✅ Output matches: '6' - Function correctly implements the required logic

### Syntax Error (Score: 0.0)

**Scenario**: Model writes code with syntax errors

```python

# User prompt: "Write a Python function `add_one` that takes an integer and returns the integer incremented by 1. Input: 5"

# Model response:

def add_one(x) # Missing colon

return x + 1

result = add_one(5)

print(result)

```

**Result**: ❌ Execution error: SyntaxError - Invalid Python syntax prevents execution

### Logic Error (Score: 0.0)

**Scenario**: Model writes syntactically correct but logically incorrect code

```python

# User prompt: "Write a Python function `add_one` that takes an integer and returns the integer incremented by 1. Input: 5"

# Model response:

def add_one(x):

return x + 2 # Wrong logic: adds 2 instead of 1

result = add_one(5)

print(result)

```

**Result**: ❌ Expected: '6', Got: '7' - Logic error produces wrong output

### Missing Function Call (Score: 0.0)

**Scenario**: Model defines function but doesn't call it with the input

```python

# User prompt: "Write a Python function `add_one` that takes an integer and returns the integer incremented by 1. Input: 5"

# Model response:

def add_one(x):

return x + 1

# Missing: result = add_one(5)

# Missing: print(result)

```

**Result**: ❌ Expected: '6', Got: '' - No output produced

### Runtime Error (Score: 0.0)

**Scenario**: Model writes code that fails during execution

```python

# User prompt: "Write a Python function `get_length` that takes a list and returns its length. Input: [1, 2, 3]"

# Model response:

def get_length(lst):

return lst.length() # Wrong method: should use len()

result = get_length([1, 2, 3])

print(result)

```

**Result**: ❌ Execution error: AttributeError - Runtime error during function call

### Edge Case Handling (Score: 1.0)

**Scenario**: Model correctly handles edge cases like empty lists or zero values

```python

# User prompt: "Write a Python function `get_length` that takes a list and returns its length. Input: []"

# Model response:

def get_length(lst):

return len(lst)

result = get_length([])

print(result)

```

**Result**: ✅ Output matches: '0' - Correctly handles empty list edge case

## Conclusion

This basic coding evaluation demonstrates how to assess AI models' programming capabilities using code execution and output comparison. The evaluation ensures models can write syntactically correct code, implement proper logic, handle various inputs, and produce exact expected outputs.

This evaluation is particularly valuable for:

* **AI model assessment**: Evaluating language models' programming capabilities

* **Code generation tools**: Validating the correctness of automatically generated code

* **Algorithm testing**: Ensuring implementations produce correct results

The basic coding evaluation focuses on **functional correctness** rather than code style or efficiency, making it essential for building reliable AI systems that can write working code. It provides objective scoring with secure execution, immediate feedback, and scalable automated testing.

# Function Calling Evaluation

Source: https://evalprotocol.io/example/function-calling

Evaluate function calling accuracy with exact tool match comparison

This example demonstrates how to create comprehensive function calling evaluations using the Eval Protocol (EP) framework. The evaluation uses the `exact_tool_match_reward` function to assess whether models correctly call the right functions with the correct arguments in the expected format.

You can find the complete code for this example at [test\_pytest\_function\_calling.py](https://github.com/eval-protocol/python-sdk/blob/main/tests/pytest/test_pytest_function_calling.py).

## Understanding Function Calling Evaluation

Function calling evaluation assesses a model's ability to:

* **Identify when to use tools**: Determine if a user query requires function execution

* **Select the correct function**: Choose the appropriate tool from available options

* **Provide accurate arguments**: Pass the right parameters with correct values

* **Follow proper formatting**: Use the expected tool call structure

Unlike text-based evaluations that focus on content generation, function calling evaluations test a model's **tool selection and parameterization capabilities** - critical skills for AI agents that interact with external systems.

## Understanding the Dataset Structure

The function calling dataset contains diverse test cases that evaluate different aspects of tool usage, from simple weather queries to complex nested object creation.

### Dataset Format

Each entry in the dataset contains:

* **`messages`**: Conversation history with user queries and assistant responses

* **`tools`**: Available function definitions with schemas

* **`ground_truth`**: Expected tool calls in JSON format

* **`evaluation_result`**: Pre-computed evaluation scores for validation

* **`input_metadata`**: Additional context including task type and difficulty

### Example Dataset Entries

**Perfect Match - Weather Query:**

```json

{

"messages": [

{"role": "user", "content": "What's the weather in London?"},

{

"role": "assistant",

"tool_calls": [

{

"type": "function",

"function": {

"name": "get_weather",

"arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Get weather information for a location",

"parameters": {

"type": "object",

"properties": {

"location": {"type": "string", "description": "The city name"},

"unit": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "Temperature unit"

}

},

"required": ["location", "unit"]

}

}

}

],

"ground_truth": "{\"tool_calls\": [{\"type\": \"function\", \"function\": {\"name\": \"get_weather\", \"arguments\": \"{\\\"location\\\": \\\"London\\\", \\\"unit\\\": \\\"celsius\\\"}\"}}]}",

"input_metadata": {

"row_id": "weather_london_perfect",

"dataset_info": {"task_type": "function_calling", "difficulty": "easy"}

}

}

```

**Argument Mismatch - Wrong Unit:**

```json

{

"messages": [

{"role": "user", "content": "What's the weather in London?"},

{

"role": "assistant",

"tool_calls": [

{

"type": "function",

"function": {

"name": "get_weather",

"arguments": "{\"location\": \"London\", \"unit\": \"fahrenheit\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Get weather information for a location",

"parameters": {

"type": "object",

"properties": {

"location": {"type": "string", "description": "The city name"},

"unit": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "Temperature unit"

}

},

"required": ["location", "unit"]

}

}

}

],

"ground_truth": "{\"tool_calls\": [{\"type\": \"function\", \"function\": {\"name\": \"get_weather\", \"arguments\": \"{\\\"location\\\": \\\"London\\\", \\\"unit\\\": \\\"celsius\\\"}\"}}]}",

"input_metadata": {

"row_id": "weather_london_unit_mismatch",

"dataset_info": {"task_type": "function_calling", "difficulty": "easy"}

}

}

```

**Function Name Mismatch:**

```json

{

"messages": [

{"role": "user", "content": "What's the weather in London?"},

{

"role": "assistant",

"tool_calls": [

{

"type": "function",

"function": {

"name": "fetch_weather",

"arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Get weather information for a location",

"parameters": {

"type": "object",

"properties": {

"location": {"type": "string", "description": "The city name"},

"unit": {

"type": "string",

"enum": ["celsius", "fahrenheit"],

"description": "Temperature unit"

}

},

"required": ["location", "unit"]

}

}

}

],

"ground_truth": "{\"tool_calls\": [{\"type\": \"function\", \"function\": {\"name\": \"get_weather\", \"arguments\": \"{\\\"location\\\": \\\"London\\\", \\\"unit\\\": \\\"celsius\\\"}\"}}]}",

"input_metadata": {

"row_id": "weather_london_name_mismatch",

"dataset_info": {"task_type": "function_calling", "difficulty": "easy"}

}

}

```

**No Tool Call Expected:**

```json

{

"messages": [

{"role": "user", "content": "Tell me a joke."},

{"role": "assistant", "content": "Why did the chicken cross the road?"}

],

"tools": [],

"ground_truth": "{\"tool_calls\": []}",

"input_metadata": {

"row_id": "joke_no_calls",

"dataset_info": {"task_type": "function_calling", "difficulty": "easy"}

}

}

```

**Complex Nested Object Creation:**

```json

{

"messages": [

{"role": "user", "content": "Create a user for John Doe"},

{

"role": "assistant",

"tool_calls": [

{

"type": "function",

"function": {

"name": "create_user",

"arguments": "{\"user\": {\"firstName\": \"John\", \"lastName\": \"Doe\", \"age\": 30}}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "create_user",

"description": "Create a new user",

"parameters": {

"type": "object",

"properties": {

"user": {

"type": "object",

"properties": {

"firstName": {"type": "string"},

"lastName": {"type": "string"},

"age": {"type": "number"}

},

"required": ["firstName", "lastName", "age"]

}

},

"required": ["user"]

}

}

}

],

"ground_truth": "{\"tool_calls\": [{\"type\": \"function\", \"function\": {\"name\": \"create_user\", \"arguments\": \"{\\\"user\\\": {\\\"firstName\\\": \\\"John\\\", \\\"lastName\\\": \\\"Doe\\\", \\\"age\\\": 30}}\"}}]}",

"input_metadata": {

"row_id": "create_user_nested",

"dataset_info": {"task_type": "function_calling", "difficulty": "hard"}

}

}

```

### Dataset Characteristics

**Test Scenarios**: The dataset covers various function calling challenges:

* **Perfect matches**: Correct function name and arguments

* **Argument mismatches**: Wrong parameter values (e.g., wrong temperature unit)

* **Function name errors**: Calling non-existent or wrong functions

* **Extra calls**: Making unnecessary tool calls

* **Missing calls**: Failing to call required functions

* **No-call scenarios**: Queries that don't require function execution

* **Complex objects**: Nested parameter structures

* **Invalid JSON**: Malformed argument strings

**Tool Types**: Various function categories:

* **Weather services**: Location-based queries with units

* **User management**: CRUD operations with complex objects

* **Data retrieval**: Search and find operations

* **Utility functions**: Simple parameterized operations

**Difficulty Levels**: Progressive complexity:

* **Easy**: Simple single-parameter calls

* **Medium**: Multi-parameter calls with validation

* **Hard**: Nested object structures and complex schemas

## Step 1: Import Required Dependencies

First, we import the necessary modules from the EP framework:

```python

import json

from typing import Any, Dict, List

from eval_protocol.models import EvaluationRow

from eval_protocol.pytest import SingleTurnRolloutProcessor, evaluation_test

from eval_protocol.rewards.function_calling import exact_tool_match_reward

```

* `json`: Python's JSON module for parsing ground truth data

* `typing`: Python's typing module for type hints (Any, Dict, List)

* `EvaluationRow`: The data structure containing conversation messages and metadata

* `default_single_turn_rollout_processor`: Default processor for single-turn conversations

* `evaluation_test`: Decorator for configuring evaluation tests

* `exact_tool_match_reward`: Built-in function calling evaluation function

## Step 2: Create the Dataset Adapter

We need to convert the function calling dataset format to the EP's expected format:

```python

def function_calling_to_evaluation_row(rows: List[Dict[str, Any]]) -> List[EvaluationRow]:

"""

Convert function calling dataset entries to EvaluationRow objects.

This adapter extracts the conversation messages, available tools, and ground truth

from the function calling dataset format and creates EvaluationRow objects that

the EP framework can process.

Args:

rows: List of function calling dataset entries

Returns:

List of EvaluationRow objects ready for evaluation

"""

dataset: List[EvaluationRow] = []

for row in rows:

dataset.append(

EvaluationRow(

messages=row["messages"][:1], # Only the user message

tools=row["tools"], # Available function definitions

ground_truth=row["ground_truth"] # Expected tool calls

)

)

return dataset

```

This adapter:

* Takes the raw function calling dataset as a list of dictionaries

* Extracts the user message (first message in the conversation)

* Includes the available tools/function definitions

* Sets the ground truth to the expected tool calls

* Returns the list of evaluation rows

**Key transformations:**

* **Message extraction**: Uses only the user message since the assistant's response will be generated during evaluation

* **Tool preservation**: Maintains the function schemas for context

* **Ground truth**: Preserves the expected tool calls for comparison

## Step 3: Configure and Run the Evaluation

We use the `@evaluation_test` decorator to configure the evaluation:

```python

@evaluation_test(

input_dataset=["tests/pytest/data/function_calling.jsonl"],

completion_params=[{"model": "accounts/fireworks/models/kimi-k2-instruct"}],

mode="pointwise",

dataset_adapter=function_calling_to_evaluation_row,

rollout_processor=SingleTurnRolloutProcessor(),

)

async def test_pytest_function_calling(row: EvaluationRow) -> EvaluationRow:

"""Run pointwise evaluation on sample dataset using pytest interface."""

ground_truth = json.loads(row.ground_truth)

result = exact_tool_match_reward(row.messages, ground_truth)

row.evaluation_result = result

print(result)

return row

```

**Configuration parameters:**

* `input_dataset`: Path to the function calling dataset JSONL file

* `model`: The model to evaluate (Fireworks Kimi model in this case)

* `mode`: `pointwise` for evaluating individual rows since each row can be evaluated independently

* `dataset_adapter`: Function that converts function calling format to EvaluationRow objects

* `rollout_processor`: Uses default single-turn processor for function calling evaluations

**Evaluation process:**

1. **Parse ground truth**: Convert the JSON string to a dictionary for comparison

2. **Extract tool calls**: The `exact_tool_match_reward` function analyzes the assistant's response

3. **Compare exactly**: Check if function names, arguments, and order match perfectly

4. **Return results**: Provide binary score (1.0 for perfect match, 0.0 for any mismatch)

## Core Functions Explained

### `exact_tool_match_reward` Function

The `exact_tool_match_reward` function is a built-in evaluation function that performs exact matching between generated and expected tool calls. It's located in `eval_protocol.rewards.function_calling`.

**Key Features:**

* **Exact matching**: Requires perfect alignment of function names, arguments, and order

* **Multiple formats**: Handles both structured tool calls and XML-formatted calls

* **JSON parsing**: Automatically deserializes and normalizes tool call arguments

* **Robust comparison**: Uses sorted JSON serialization for consistent comparison

* **Error handling**: Gracefully handles malformed inputs and edge cases

**Function Signature:**

```python

def exact_tool_match_reward(

messages: Union[List[Message], List[Dict[str, Any]]],

ground_truth: Optional[Dict[str, Any]] = None,

**kwargs: Any,

) -> EvaluateResult:

```

**Parameters:**

* `messages`: List of conversation messages (extracts tool calls from the last assistant message)

* `ground_truth`: Expected tool calls dictionary for comparison

* `**kwargs`: Additional parameters (not used in this implementation)

**Return Value:**

* `EvaluateResult` with score (1.0 for exact match, 0.0 for any mismatch) and detailed reasoning

**Example Usage:**

```python

result = exact_tool_match_reward(

messages=messages,

ground_truth={

"tool_calls": [

{

"type": "function",

"function": {

"name": "get_weather",

"arguments": '{"location": "London", "unit": "celsius"}'

}

}

]

}

)

print(f"Score: {result.score}") # 1.0 if exact match, 0.0 otherwise

print(f"Reason: {result.reason}") # Detailed explanation of the evaluation

```

### `eval_tool_call` Function

The core evaluation logic is implemented in the `eval_tool_call` function, which handles the detailed comparison of tool calls.

**Function Signature:**

```python

def eval_tool_call(generation: dict, ground_truth: dict) -> bool:

```

**Implementation Details:**

1. **Extract expected calls**: Parse ground truth tool calls from the expected format

2. **Process generated calls**: Handle both structured tool calls and XML-formatted calls

3. **Normalize formats**: Convert all calls to a consistent internal format

4. **Compare exactly**: Use JSON serialization with sorted keys for deterministic comparison

**Supported Formats:**

* **Structured tool calls**: Standard OpenAI format with `tool_calls` array

* **XML-formatted calls**: `...` tags in content

* **Mixed formats**: Combinations of different call types

### `compare_tool_calls` Function

The final comparison is performed by the `compare_tool_calls` function, which ensures exact matching.

**Function Signature:**

```python

def compare_tool_calls(generated_tool_calls: list, gt_tool_calls: list) -> bool:

```

**Comparison Logic:**

1. **Length check**: Number of tool calls must match exactly

2. **JSON serialization**: Convert each tool call to sorted JSON string

3. **Exact matching**: Compare serialized strings for perfect equality

4. **Order matters**: Tool calls must be in the same sequence

**Example Comparison:**

```python

# Generated calls

generated = [

{"name": "get_weather", "arguments": '{"location": "London", "unit": "celsius"}'}

]

# Expected calls

expected = [

{"name": "get_weather", "arguments": '{"location": "London", "unit": "celsius"}'}

]

# Result: True (exact match)

```

## Evaluation Scenarios and Results

The function calling evaluation handles various scenarios with different outcomes:

### Perfect Match (Score: 1.0)

**Scenario**: Model calls the exact function with correct arguments

```json

{

"generated": {"name": "get_weather", "arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"},

"expected": {"name": "get_weather", "arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"}

}

```

**Result**: ✅ Perfect match - all function names, arguments, and order are correct

### Argument Mismatch (Score: 0.0)

**Scenario**: Model calls correct function but with wrong arguments

```json

{

"generated": {"name": "get_weather", "arguments": "{\"location\": \"London\", \"unit\": \"fahrenheit\"}"},

"expected": {"name": "get_weather", "arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"}

}

```

**Result**: ❌ Argument mismatch - wrong temperature unit specified

### Function Name Error (Score: 0.0)

**Scenario**: Model calls wrong function name

```json

{

"generated": {"name": "fetch_weather", "arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"},

"expected": {"name": "get_weather", "arguments": "{\"location\": \"London\", \"unit\": \"celsius\"}"}

}

```

**Result**: ❌ Function name error - called non-existent function

### Extra Tool Call (Score: 0.0)

**Scenario**: Model makes unnecessary additional calls

```json

{

"generated": [

{"name": "get_weather", "arguments": "{\"location\": \"London\"}"},

{"name": "extra_call", "arguments": "{}"}

],

"expected": [

{"name": "get_weather", "arguments": "{\"location\": \"London\"}"}

]

}

```

**Result**: ❌ Extra tool call - made unnecessary additional function call

### Missing Tool Call (Score: 0.0)

**Scenario**: Model fails to call required function

```json

{

"generated": [],

"expected": [

{"name": "get_weather", "arguments": "{\"location\": \"London\"}"}

]

}

```

**Result**: ❌ Missing tool call - failed to call required function

### No Call Expected (Score: 1.0)

**Scenario**: Query doesn't require function execution

```json

{

"generated": [],

"expected": []

}

```

**Result**: ✅ No call expected - correctly avoided unnecessary function calls

## Advanced Features

### XML-Formatted Tool Calls

The evaluation supports XML-formatted tool calls embedded in content:

```python

# Assistant response with XML formatting

content = '{"type": "function", "function": {"name": "get_weather", "arguments": "{\\"location\\": \\"Berlin\\", \\"unit\\": \\"celsius\\"}"}}'

# The evaluation automatically parses and compares these calls

```

### Complex Nested Objects

The evaluation handles complex parameter structures:

```python

# Nested user object creation

{

"name": "create_user",

"arguments": '{"user": {"firstName": "John", "lastName": "Doe", "age": 30}}'

}

```

### Multiple Tool Calls

The evaluation supports scenarios with multiple sequential tool calls:

```python

# Multiple weather queries

[

{"name": "get_weather", "arguments": '{"location": "London"}'},

{"name": "get_weather", "arguments": '{"location": "Paris"}'}

]

```

## Best Practices for Function Calling Evaluation

### Dataset Design

* **Diverse scenarios**: Include various failure modes and edge cases

* **Progressive difficulty**: Start with simple calls and progress to complex objects

* **Real-world examples**: Use realistic function schemas and use cases

* **Clear ground truth**: Ensure expected tool calls are unambiguous

### Evaluation Configuration

* **Appropriate models**: Use models with strong function calling capabilities

* **Consistent parameters**: Use deterministic settings (temperature=0.0) for reproducible results

* **Adequate context**: Provide clear function descriptions and examples

* **Error handling**: Gracefully handle parsing errors and edge cases

### Result Interpretation

* **Binary scoring**: Understand that this is a strict exact-match evaluation

* **Detailed analysis**: Use the reasoning field to understand specific failures

* **Pattern recognition**: Look for systematic errors in function selection or argument formatting

* **Model comparison**: Compare different models' function calling accuracy

## Conclusion

This function calling evaluation example demonstrates how to create robust assessments of AI models' tool usage capabilities. The `exact_tool_match_reward` function provides a strict but comprehensive evaluation that ensures models can:

1. **Identify when tools are needed**: Distinguish between queries requiring function calls and those that don't

2. **Select appropriate functions**: Choose the correct tool from available options

3. **Provide accurate parameters**: Pass the right arguments with correct values

4. **Follow proper formatting**: Use the expected tool call structure consistently

This evaluation is particularly valuable for:

* **Agent development**: Ensuring AI agents can reliably interact with external systems

* **API integration**: Validating models' ability to use structured APIs correctly

* **Tool selection**: Testing models' understanding of when and how to use different tools

* **Parameter accuracy**: Verifying that models provide correct input values

The function calling evaluation complements other evaluation types by focusing on **execution accuracy** rather than content generation, making it essential for building reliable AI systems that can interact with external tools and APIs.

# GPQA (Open-Resource)

Source: https://evalprotocol.io/example/gpqa

Multiple-choice science QA with simple exact-match scoring

This example runs a minimal GPQA-style evaluation using the public Diamond split CSV. It’s meant for quick comparisons during model picking, not a full benchmark reproduction.

This example is implemented as a suite in `eval_protocol/benchmarks/suites/gpqa.py` and exported as `gpqa`.

## What it does

* Downloads the GPQA Diamond CSV and constructs MCQ prompts (A–D).

* Appends a system-side ground-truth token (e.g., `__GT__:A`) per row.

* Extracts the predicted letter from the assistant’s final message and checks exact match.

## How it’s configured

* `@evaluation_test` feeds prebuilt `input_messages` and sets rollout parameters.

* Simple scoring: 1.0 for exact letter match, else 0.0.

## Run it locally

After installing eval-protocol, you can run the benchmark from anywhere:

```bash

pytest --pyargs eval_protocol.benchmarks.test_gpqa -v \

--ep-print-summary --ep-summary-json artifacts/gpqa.json

```

Use `--ep-max-rows=20` to tune runtime. The CSV is fetched at runtime.

## Notes

* Convenience-oriented: focuses on a clean pipeline and minimal metrics.

* The evaluation relies on extracting exactly one of `A, B, C, D` from the model output.

# GSM8K Math Evaluation

Source: https://evalprotocol.io/example/gsm8k

Evaluate mathematical reasoning with GSM8K dataset using structured thinking format

This example demonstrates how to create a comprehensive math evaluation using the GSM8K dataset. The evaluation combines numerical accuracy checking with format validation, requiring models to follow a structured thinking format with `......` tags.

You can find the complete code for this example at [test\_pytest\_math\_example.py](https://github.com/eval-protocol/python-sdk/blob/main/tests/pytest/test_pytest_math_example.py).

## Understanding the GSM8K Dataset

The GSM8K (Grade School Math 8K) dataset contains grade school math word problems that test mathematical reasoning and problem-solving abilities. Each problem requires multi-step reasoning to arrive at the correct numerical answer.

### Dataset Format

Each entry in the dataset contains:

* **`id`**: Unique identifier for the test case

* **`user_query`**: The math word problem to solve

* **`ground_truth_for_eval`**: The expected solution with step-by-step reasoning and final answer

### Example Dataset Entries

**Basic Arithmetic Problem:**

```json

{

"id": "gsm8k_test_0",

"user_query": "Janet's ducks lay 16 eggs per day. She eats three for breakfast every morning and bakes muffins for her friends every day with four. She sells the remainder at the farmers' market daily for $2 per fresh duck egg. How much in dollars does she make every day at the farmers' market?",

"ground_truth_for_eval": "Janet sells 16 - 3 - 4 = <<16-3-4=9>>9 duck eggs a day.\nShe makes 9 * 2 = $<<9*2=18>>18 every day at the farmer's market.\n#### 18"

}

```

**Percentage and Profit Problem:**

```json

{

"id": "gsm8k_test_2",

"user_query": "Josh decides to try flipping a house. He buys a house for $80,000 and then puts in $50,000 in repairs. This increased the value of the house by 150%. How much profit did he make?",

"ground_truth_for_eval": "The cost of the house and repairs came out to 80,000+50,000=$<<80000+50000=130000>>130,000\nHe increased the value of the house by 80,000*1.5=<<80000*1.5=120000>>120,000\nSo the new value of the house is 120,000+80,000=$<<120000+80000=200000>>200,000\nSo he made a profit of 200,000-130,000=$<<200000-130000=70000>>70,000\n#### 70000"

}

```

### Dataset Characteristics

**Problem Types**: The dataset covers various mathematical concepts:

* Basic arithmetic (addition, subtraction, multiplication, division)

* Percentages and ratios

* Multi-step word problems

* Real-world applications (business, cooking, sports)

**Solution Format**: Ground truth solutions include:

* Step-by-step reasoning with intermediate calculations

* Computed values in `<>` format

* Final answer marked with `#### answer`

**Complexity**: Problems require:

* Understanding of mathematical concepts

* Multi-step reasoning

* Accurate numerical computation

* Clear presentation of work

## Step 1: Import Required Dependencies

First, we import the necessary modules from the EP framework:

```python

import re

from typing import Any, Dict, List

from eval_protocol.models import EvaluateResult, EvaluationRow, MetricResult

from eval_protocol.pytest import SingleTurnRolloutProcessor, evaluation_test

from eval_protocol.rewards.math import math_reward

from examples.math_example.main import check_think_answer_format

from tests.pytest.helper.gsm8k_to_evaluation_row import gsm8k_to_evaluation_row

```

* `re`: Python's regex module for pattern matching

* `typing`: Python's typing module for type hints (Any, Dict, List)

* `EvaluateResult`: The result object containing evaluation score and reasoning

* `EvaluationRow`: The data structure containing conversation messages and ground truth

* `MetricResult`: Individual metric results for detailed analysis

* `default_single_turn_rollout_processor`: Default processor for single-turn conversations

* `evaluation_test`: Decorator for configuring evaluation tests

* `math_reward`: Built-in math evaluation function

* `check_think_answer_format`: Function to validate structured thinking format

* `gsm8k_to_evaluation_row`: Adapter function to convert GSM8K dataset format

## Step 2: Create the Dataset Adapter

We need to convert the GSM8K dataset format to the EP's expected format:

```python

def gsm8k_to_evaluation_row(data: List[Dict[str, Any]]) -> List[EvaluationRow]:

"""Convert GSM8K dataset entries to EvaluationRow objects."""

return [

EvaluationRow(

messages=[Message(role="user", content=row["user_query"])],

ground_truth=row["ground_truth_for_eval"]

)

for row in data

]

```

This adapter:

* Takes the raw GSM8K dataset as a list of dictionaries

* Converts each row to an `EvaluationRow` with a user message containing the math problem

* Sets the ground truth to the expected solution with step-by-step reasoning

* Returns the list of evaluation rows

## Step 3: Define Format Validation

We create a function to check if the model's response follows the required structured thinking format:

```python

def check_think_answer_format(text: str) -> bool:

"""Check if text follows ...... format."""

if not text:

return False

pattern = r"[\s\S]*?[\s\S]*?[\s\S]*?"

return bool(re.search(pattern, text))

```

**Regex pattern explained:**

* `[\s\S]*?`: Matches the thinking section, including any characters and newlines

* `[\s\S]*?`: Matches any characters (including newlines) between the think and answer tags

* `[\s\S]*?`: Matches the answer section

* `re.search()`: Searches for the pattern anywhere in the text (not requiring it to be the entire text)

This ensures the response contains both `` and `` sections in the correct order.

## Step 4: Configure, implement, and run the evaluation

We use the `@evaluation_test` decorator to configure the evaluation. The evaluation function combines numerical accuracy with format validation.

```python

@evaluation_test(

input_dataset=["development/gsm8k_sample.jsonl"],

dataset_adapter=gsm8k_to_evaluation_row,

completion_params=[{"model": "accounts/fireworks/models/kimi-k2-instruct", "temperature": 0.0}],

max_dataset_rows=5,

passed_threshold=0.0,

rollout_processor=SingleTurnRolloutProcessor(),

mode="pointwise",

evaluation_test_kwargs=[

{"math_reward_kwargs": {"tolerance": 0.001, "absolute_tolerance": 1e-8, "require_units": False}}

],

)

def test_math_dataset(row: EvaluationRow, **kwargs) -> EvaluationRow:

"""

Evaluate math problem solving considering both accuracy and format.

This function demonstrates how to combine multiple evaluation criteria:

- Numerical accuracy using built-in math evaluation (80% weight)

- Format compliance checking for ...... structure (20% weight)

Args:

row: EvaluationRow containing the conversation messages and ground truth

**kwargs: Additional parameters (like math_reward_kwargs)

Returns:

EvaluationRow with the evaluation result

"""

# Get the assistant's response

assistant_message = row.messages[-1]

if isinstance(assistant_message, dict):

assistant_response = assistant_message.get("content", "")

else:

assistant_response = assistant_message.content or ""

# Evaluate numerical accuracy using built-in function

accuracy_result = math_reward(messages=row.messages, ground_truth=row.ground_truth, **kwargs["math_reward_kwargs"])

# Evaluate format compliance (looking for ...... format)

format_correct = check_think_answer_format(assistant_response)

format_score = 1.0 if format_correct else 0.0

# Calculate combined score with 80% accuracy and 20% formatting weight

combined_score = (0.8 * accuracy_result.score) + (0.2 * format_score)

# Create metrics structure expected by tests

metrics = {

"accuracy_reward": MetricResult(

score=accuracy_result.score,

reason=f"Numerical accuracy: {accuracy_result.reason}",

is_score_valid=True,

),

"format_reward": MetricResult(

score=format_score,

reason=f"Format compliance: {'correct' if format_correct else 'incorrect'} ...... structure",

is_score_valid=True,

),

}

row.evaluation_result = EvaluateResult(

score=combined_score,

reason=f"Combined score: {combined_score:.2f} (accuracy: {accuracy_result.score:.2f}, format: {format_score:.2f})",

metrics=metrics,

)

return row

```

**Key evaluation aspects:**

* **Numerical Accuracy**: Uses the built-in `math_reward` function to check if the final answer matches the ground truth (80% weight)

* **Format Compliance**: Ensures responses follow the structured thinking format (20% weight)

* **Weighted Combination**: Combines accuracy and format scores using 80% accuracy + 20% formatting weights

* **Detailed Metrics**: Provides separate scores for accuracy and format for detailed analysis

**Configuration parameters:**

* `input_dataset`: Path to the GSM8K sample dataset

* `dataset_adapter`: Function that converts GSM8K format to EvaluationRow objects

* `model`: The model to evaluate (Fireworks Kimi model in this case)

* `rollout_input_params`: Model parameters (temperature set to 0.0 for deterministic results)

* `max_dataset_rows`: Limit to 5 test cases for quick evaluation

* `threshold_of_success`: Set to 0.0 to see all results (can be adjusted based on requirements)

* `rollout_processor`: Uses default single-turn processor for math problems

* `mode`: `pointwise` for evaluating individual rows since each row can be evaluated independently

* `evaluation_test_kwargs`: Additional parameters for the evaluation function

## Core Functions Explained

### `math_reward` Function

The `math_reward` function is a built-in evaluation function that extracts numerical answers from text and compares them with expected values. It's located in `eval_protocol.rewards.math`.

**Key Features:**

* **Extracts numbers** from both model responses and ground truth using sophisticated regex patterns

* **Supports multiple formats**: integers, decimals, fractions, scientific notation, LaTeX formatting

* **Configurable tolerance**: Handles floating-point precision issues with `tolerance` and `absolute_tolerance` parameters

* **Unit handling**: Can require or ignore units with the `require_units` parameter

* **Robust matching**: Finds the best match between extracted answers when multiple numbers are present

**Function Signature:**

```python

def math_reward(

messages: List[Message],

*,

ground_truth: str,

tolerance: float = 0.001,

absolute_tolerance: float = 1e-8,

require_units: bool = False,

**kwargs: Any,

) -> EvaluateResult:

```

**Parameters:**

* `messages`: List of conversation messages (extracts from the last assistant message)

* `ground_truth`: Expected answer string containing the correct numerical value

* `tolerance`: Relative tolerance for floating-point comparisons (default: 0.001)

* `absolute_tolerance`: Absolute tolerance for very small numbers (default: 1e-8)

* `require_units`: Whether to require units to match (default: False)

**Return Value:**

* `EvaluateResult` with score (1.0 for correct, 0.0 for incorrect) and detailed reasoning

**Example Usage:**

```python

result = math_reward(

messages=messages,

ground_truth="18",

tolerance=0.001,

absolute_tolerance=1e-8,

require_units=False

)

print(f"Score: {result.score}") # 1.0 if answer matches, 0.0 otherwise

print(f"Reason: {result.reason}") # Detailed explanation of the evaluation

```

### `check_think_answer_format` Function

This function validates that the model's response follows the required structured thinking format with `` and `` tags.

**Function Signature:**

```python

def check_think_answer_format(text: str) -> bool:

```

**Implementation Details:**

* Uses regex pattern `r"[\s\S]*?[\s\S]*?[\s\S]*?"`

* `[\s\S]*?`: Matches the thinking section with any content

* `[\s\S]*?`: Matches any characters (including newlines) between sections

* `[\s\S]*?`: Matches the answer section with any content

* Returns `True` if both sections are present in the correct order, `False` otherwise

**Example Valid Format:**

```

Let me solve this step by step:

1. Janet's ducks lay 16 eggs per day

2. She eats 3 for breakfast

3. She uses 4 for muffins

4. So she sells: 16 - 3 - 4 = 9 eggs

5. At $2 per egg, she makes: 9 * 2 = $18

Janet makes $18 every day at the farmers' market.

```

**Example Invalid Formats:**

* Missing `` section: `18`

* Missing `` section: `Step by step reasoning...`

* Wrong order: `18reasoning...`

* No tags: "The answer is 18"

### `gsm8k_to_evaluation_row` Function

This adapter function converts the GSM8K dataset format to the EP framework's expected `EvaluationRow` format.

**Function Signature:**

```python

def gsm8k_to_evaluation_row(data: List[Dict[str, Any]]) -> List[EvaluationRow]:

```

**Input Format:**

```python

[

{

"id": "gsm8k_test_0",

"user_query": "Janet's ducks lay 16 eggs per day...",

"ground_truth_for_eval": "Janet sells 16 - 3 - 4 = <<16-3-4=9>>9 duck eggs..."

},

# ... more entries

]

```

**Output Format:**

```python

[

EvaluationRow(

messages=[Message(role="user", content="Janet's ducks lay 16 eggs per day...")],

ground_truth="Janet sells 16 - 3 - 4 = <<16-3-4=9>>9 duck eggs..."

),

# ... more EvaluationRow objects

]

```

**Key Transformations:**

* Extracts `user_query` and creates a `Message` with role "user"

* Uses `ground_truth_for_eval` as the ground truth for comparison

* Creates `EvaluationRow` objects that the EP framework can process

* Maintains the original problem structure while adapting to EP's expected format

## Expected Model Response Format

For optimal evaluation, models should respond in this structured format:

```

Let me solve this step by step:

1. Janet's ducks lay 16 eggs per day

2. She eats 3 for breakfast

3. She uses 4 for muffins

4. So she sells: 16 - 3 - 4 = 9 eggs

5. At $2 per egg, she makes: 9 * 2 = $18

Janet makes $18 every day at the farmers' market.

```

**Format requirements:**

* `` section: Detailed step-by-step reasoning

* `` section: Clear final answer

* Both sections must be present for format compliance

* Numerical accuracy is evaluated from the final answer

## Evaluation Results

The evaluation provides comprehensive feedback:

**Successful Response:**

* **Score**: 1.0 (0.8 x 1.0 + 0.2 x 1.0 = 1.0)

* **Reason**: "Combined score: 1.00 (accuracy: 1.00, format: 1.00)"

* **Metrics**: Both accuracy and format scores are 1.0

**Correct Answer, Incorrect Format:**

* **Score**: 0.8 (0.8 x 1.0 + 0.2 x 0.0 = 0.8)

* **Reason**: "Combined score: 0.80 (accuracy: 1.00, format: 0.00)"

* **Metrics**: Accuracy score 1.0, format score 0.0

**Incorrect Answer, Correct Format:**

* **Score**: 0.2 (0.8 x 0.0 + 0.2 x 1.0 = 0.2)